Let’s be real: hiring has always been a messy, biased, and often unfair game.

A manager rejects a candidate for a typo. A recruiter hires someone from their alma mater while ignoring a self-taught genius. A company faces lawsuits because their hiring patterns look like a demographics horror show. These aren’t just “oops” moments—they’re systemic failures.

Enter AI. Not as a hero, but as that brutally honest friend who calls out your BS. Here’s how it’s dragging accountability into the light—kicking and screaming:

1. “Gut Feelings” Get a Reality Check

We’ve all heard it: “I didn’t vibe with them.”

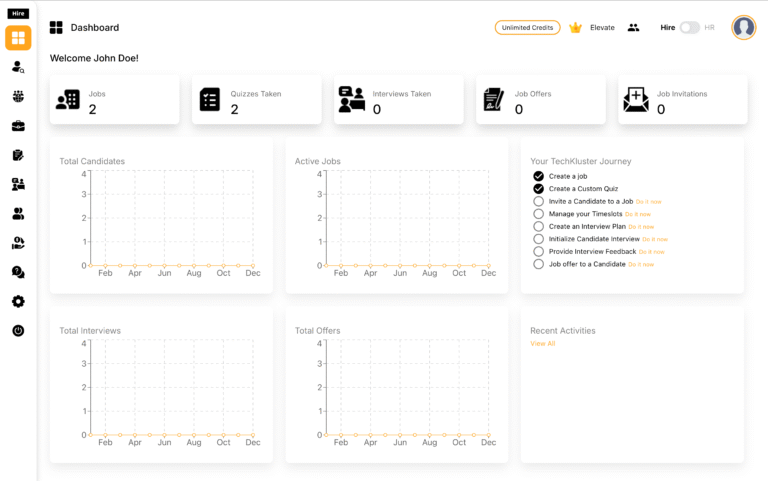

AI says: “Cool story. Let’s see the data.” Modern tools force recruiters to justify decisions by matching candidates to pre-set criteria (think: “Did they nail the coding test? Yes/No”). If a hiring manager rejects someone who aced every skill, the system raises an eyebrow: “Explain why Karen from Marketing trashed the engineer who solved the test in record time.”

Real-world chaos:

A retail giant cut biased rejections by 40% after AI exposed that managers were axing resumes with “ethnic” names. Ouch.
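What might that raised eyebrow look like in practice? Here is a minimal sketch, not any vendor's actual logic. The `Decision` record, its field names, and the `needs_explanation` rule are all hypothetical: the idea is simply that a rejection of someone who met every pre-set criterion, with no written justification, gets kicked back for an explanation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Decision:
    """One hiring decision (hypothetical schema for illustration)."""
    candidate_id: str
    criteria_met: dict[str, bool]  # e.g. {"coding_test": True}
    outcome: str                   # "advance" or "reject"
    reviewer: str
    justification: str = ""


def needs_explanation(decision: Decision) -> bool:
    """True when a candidate who met every criterion was rejected
    and the reviewer left no written justification."""
    met_all = bool(decision.criteria_met) and all(decision.criteria_met.values())
    return (
        decision.outcome == "reject"
        and met_all
        and not decision.justification.strip()
    )


decisions = [
    Decision("c-101", {"coding_test": True, "system_design": True}, "reject", "karen"),
    Decision("c-102", {"coding_test": False, "system_design": True}, "reject", "karen"),
    Decision("c-103", {"coding_test": True, "system_design": True}, "advance", "sam"),
]

# Only c-101 aced everything and was rejected without a stated reason.
flagged = [d.candidate_id for d in decisions if needs_explanation(d)]
print(flagged)
```

The rule doesn't overrule anyone. It just refuses to let “I didn’t vibe with them” stand in for a reason.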

2. Audit Trails: The Receipts Don’t Lie

Ever tried arguing with a spreadsheet?

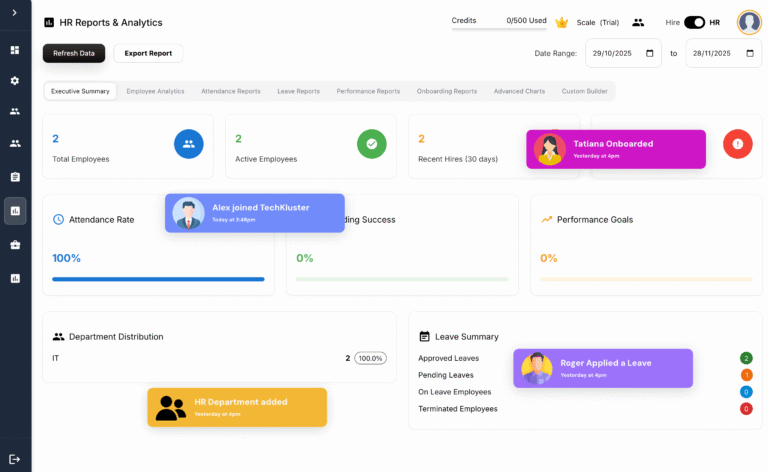

AI logs every move—who shortlisted, who rejected, why. When a candidate demands answers, recruiters can’t hide behind “We found a better fit.” Instead, it’s: “Your coding score was bottom 20%,” or “Your portfolio had zero mobile app examples.” Brutal? Maybe. But at least it’s honest.

Plot twist:

A startup dodged a lawsuit by proving a rejection was skills-based, not because the candidate was a 50-year-old career switcher.
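The receipts themselves can be boring. As a rough sketch, assuming a simple in-memory log (the `AuditLog` class and its fields are made up for illustration; a real system would persist entries somewhere tamper-resistant), the key design choice is refusing to record a decision that comes without a reason:

```python
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only record of who did what to which candidate, and why."""

    def __init__(self):
        self._entries = []

    def record(self, candidate_id, actor, action, reason):
        # No reason, no entry: "better fit" with nothing behind it is rejected here.
        if not reason.strip():
            raise ValueError("every decision needs a stated reason")
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "candidate_id": candidate_id,
            "actor": actor,
            "action": action,
            "reason": reason,
        }
        self._entries.append(entry)
        return entry

    def history(self, candidate_id):
        """Every logged step for one candidate, in the order it happened."""
        return [e for e in self._entries if e["candidate_id"] == candidate_id]


log = AuditLog()
log.record("c-204", "recruiter_1", "shortlisted", "portfolio matched role")
log.record("c-204", "hiring_manager_2", "rejected", "coding score in bottom 20%")

for entry in log.history("c-204"):
    print(json.dumps(entry))
```

When the candidate (or a lawyer) asks what happened, `history` is the answer.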

3. Compliance Without the Nightmare Fuel

GDPR. NYC’s Local Law 144 on automated hiring tools.

Compliance feels like juggling flaming torches. AI automates the snooze-fest: anonymizing resumes, redacting birthdays, and flagging job posts that scream “bro culture” (“ninja wanted” = 🚩). It even auto-generates reports for regulators—so you’re not scrambling at midnight before an audit.

Fun(ish) fact:

65% of companies say AI compliance tools are cheaper than lawyer fees for bias lawsuits.
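Two of those chores are simple enough to sketch. Big caveats: the term list below is illustrative, the regex only catches birthdates that sit on a labeled line like “DOB: ...”, and real tools do far more than this. Function names are hypothetical.

```python
from __future__ import annotations

import re

# Illustrative only: a real list would be longer and reviewed by humans.
FLAGGED_TERMS = ["ninja", "rockstar", "guru"]

# A "Date of birth" / "DOB" / "Born" label followed by the rest of that line.
DOB_LINE = re.compile(r"(?im)^(date of birth|dob|born)[ \t]*[:\-][ \t]*.*$")


def redact_birthdate(resume_text: str) -> str:
    """Replace the value on any labeled birthdate line, keeping the label."""
    return DOB_LINE.sub(lambda m: f"{m.group(1)}: [REDACTED]", resume_text)


def flag_job_post(post_text: str) -> list[str]:
    """Return the flagged terms that appear as whole words in a job post."""
    words = set(re.findall(r"[a-z]+", post_text.lower()))
    return [term for term in FLAGGED_TERMS if term in words]


resume = "Name: A. Candidate\nDOB: 1974-03-02\nSkills: Python"
print(redact_birthdate(resume))
print(flag_job_post("Ninja wanted for our rockstar team"))
```

Matching whole words keeps the flagger from tripping on innocent substrings, though it also means it will miss creative spellings.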

4. Feedback That Doesn’t Sound Like a Horoscope

Candidates know the drill:

“We went with someone more experienced” = “We hired the CEO’s nephew.” AI swaps vagueness for cold, hard truths: “You scored 20% below the benchmark on the crisis simulation,” or “Your cloud experience was lighter than a TikTok tutorial.”

Bonus:

72% of candidates prefer blunt AI feedback over corporate fluff.
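Turning a score into a sentence a human can actually use is not rocket science. A toy sketch, assuming “20% below the benchmark” means the relative gap between score and benchmark (the `feedback` function and that interpretation are assumptions for illustration):

```python
def feedback(skill: str, score: float, benchmark: float) -> str:
    """Describe a score relative to a benchmark in plain language."""
    if benchmark <= 0:
        raise ValueError("benchmark must be positive")
    # Relative gap, rounded to a whole percent.
    gap_pct = round((score - benchmark) / benchmark * 100)
    if gap_pct < 0:
        return f"You scored {abs(gap_pct)}% below the benchmark on the {skill}."
    if gap_pct > 0:
        return f"You scored {gap_pct}% above the benchmark on the {skill}."
    return f"You matched the benchmark on the {skill}."


print(feedback("crisis simulation", 64, 80))
```

The snark about TikTok tutorials is optional and probably best left to humans.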

The Catch? AI Isn’t a Fairy Godmother

For every win, there’s a facepalm moment.

That AI tool that loved Ivy Leaguers because its training data was 90% privileged grads? Or the video screener that tanked candidates with accents? Garbage in, garbage out.

How to not screw it up:

Audit training data like your career depends on it (“Why do we only hire ex-FAANG?”).

Pair AI with humans who ask, “Wait, why’d it reject all the moms with resume gaps?”

Ditch tools that “explain” decisions in robot hieroglyphics. Demand plain English.
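One way to give those humans something concrete to ask about is to compare how often each group gets through. The sketch below uses the four-fifths rule, a common screening heuristic from US employment guidelines that the list above doesn't name: a group whose selection rate falls under 80% of the best group's rate deserves a closer look. The group labels and numbers are made up, and a flag here is a prompt for review, not proof of bias.

```python
from collections import Counter


def selection_rates(outcomes):
    """outcomes: iterable of (group, advanced) pairs -> advance rate per group."""
    totals, advanced = Counter(), Counter()
    for group, was_advanced in outcomes:
        totals[group] += 1
        if was_advanced:
            advanced[group] += 1
    return {g: advanced[g] / totals[g] for g in totals}


def groups_to_review(rates, threshold=0.8):
    """Groups whose rate is below `threshold` times the highest group's rate."""
    if not rates:
        return []
    best = max(rates.values())
    if best == 0:
        return []  # nobody advanced, so there is nothing to compare against
    return sorted(g for g, r in rates.items() if r / best < threshold)


# Made-up screening results: (group, did the tool advance them?)
outcomes = (
    [("no_gap", True)] * 6 + [("no_gap", False)] * 4
    + [("resume_gap", True)] * 2 + [("resume_gap", False)] * 8
)

rates = selection_rates(outcomes)
print(rates)
print(groups_to_review(rates))
```

Run something like this on the tool's output regularly, then hand the flagged groups to a person who is allowed to ask uncomfortable questions.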

The Future? Less Trust Falls, More Trust Built

AI won’t fix hiring—but it’s holding a mirror to our worst habits. Companies leaning in see fewer lawsuits, faster hires, and candidates who believe the process is fair (even when rejected).

Hot take:

If your hiring team fears AI, they’re not scared of the tech. They’re scared of being exposed.